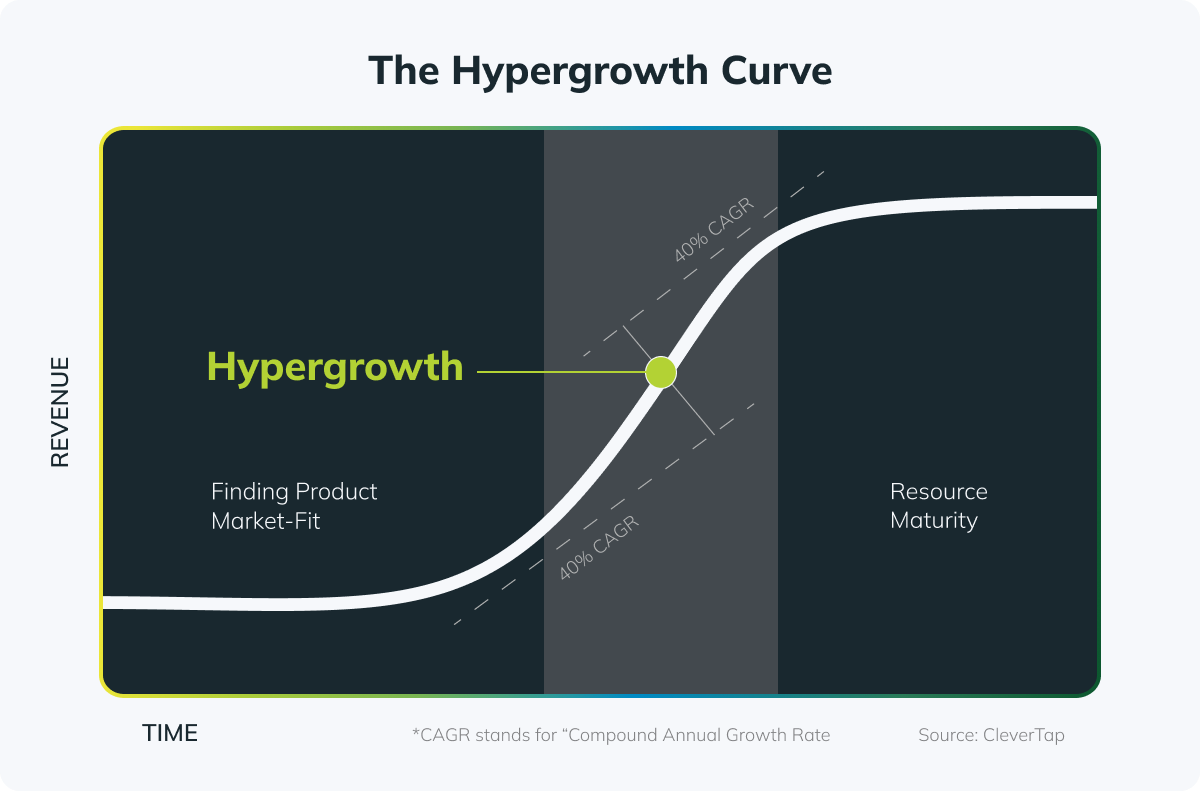

In the last several months, Paxos has hit a stage of development commonly known as “hypergrowth” due to the mainstream adoption of digital assets. Under heavy acceleration, tech companies need to rapidly evolve software systems from earlier-stage minimal viable products to enterprise-grade platforms that are scalable and reliable. These transformations are extremely challenging, and the challenges extend beyond software development to organizations as a whole.

Perhaps the biggest obstacle in engineering is addressing technology debt. A lot can be learned from dealing with it. When companies experience hypergrowth, platforms must hyperscale to keep up with demand and platform volume. At Paxos, we’re upgrading our platforms to avoid key pitfalls that would obstruct our achievement as the company transforms from start-up to scale-up. To do that, we’ve established a taxonomy of notably troublesome patterns found in MVP distributed systems.

In transitioning distributed systems from MVP to scale-up, many of the problems that confound scalability and reliability also degrade velocity (developer efficiency/productivity), devex (developer experience) and testability. Such impairments obstruct overall business growth just as significantly as impaired production service levels.

In this blog, we dive deep into technology debt in distributed systems, software design and implementation to show what Paxos aims to find and resolve in order to get ahead of hyperscaling. We do not cover other important types of debt, notably security debt – these are separate topics in their own right that we will address in the future.

The Tyranny of the Urgent, the Evil of Shortcuts

It’s well known that shortcuts cause most of the trouble – shortcuts in design, implementation, deployment and infrastructure/architecture. This type of technology debt is also known as “decision debt.” Counter-intuitively, with hindsight, such decisions often do not significantly benefit time-to-market, even in the moment they’re taken. Even worse, compensating follow-up work is often deferred indefinitely until the shortcut presents a direct obstacle to product development.

In situations where shortcuts are being contemplated, at Paxos we’ve found it useful to advocate a “barbell” strategy of choosing one of two extremes – either take the fastest genuine shortcut for MVP time-to-market purposes and consciously build up the technology debt, or design and implement for full scalability from the beginning. Any middle ground is likely to result in larger tech debt without significant time-to-market benefits.

Patterns in Technology Debt

There are also notable bad patterns in addressing tech debt that we’ve observed in the field. Resolving these types of tech debt before they begin to obstruct platform scaling is key to enabling hypergrowth. These patterns include:

1. Non-deterministic Behavior Under Load

These often arise with timing-dependent distributed code that assumes remote work will complete within a fixed time interval. This is obviously a bad idea and well-known to be unsafe. Regrettably it’s also a shortcut often taken, particularly in pre-production test harnesses “invoke an RPC; sleep N seconds; proceed”. The slightly more reliable loop “while incomplete sleep N seconds; recheck remote completion” is almost as troublesome. The consequences for velocity and devex of this kind of code can be surprisingly severe.

Polling for success instead of an async callback / continuation / completion

- Throttles scaling and throughput

- Introduces jitter due to pausing for multiples of a discrete polling interval

- Prevents full-speed runs of stress/capacity testing workloads

- Polling is almost never the right solution, even in a test harness – implement a mechanism for asynchronous completion!

Sleeping (synchronously blocking) to rely on remote completion within a time interval

- Throttles scaling and throughput

- Prevents full-speed runs of automated end-to-end test suites

- Degrades devex and reduces velocity

- Timeout code paths are often untested (since code executes within the expected timeframe most of the time), and the path taken is timing-dependent and not deterministic, inducing fragility. A secondary effect of this kind of test fragility can be that teams are misled about the causes of infrequently exhibited underlying problems, as they mistakenly assume that test failures arise from timing issues.

- Sleeps should rarely be used, even in test harnesses

- Asynchronously scheduling interval-based work (such as timeouts and heartbeats) is not sleeping!

2. Weak Completion Guarantees

This could be called “weak delivery guarantees”, since it typically concerns delivery failures of RPCs and/or queued messages. This problem is often accompanied by it’s evil twin in many applications, which is “undesirable stale delivery” – due to programmers not designing their use of message queues to enable “abort-on-queue” or “cancel-on-queue”. A prominent example of this occurs in trading, ordering, or auction systems where stale orders are generally mispriced or misplaced. These orders will often be canceled by the originator during stalled processing due to a failover or some other hiccup, and those chasing cancellations should overtake the original orders that remain in the queue.

Enqueuing without any mechanism for asynchronous continuation and failure handling

- This often arises due to over-simplistic use of queuing APIs by senders, who “queue and forget”, without recording queue position information

- Without persisting the keys to enqueued messages, abort-on-queue and selective re-enqueue on restart/failover is usually impossible (an MQ key is typically a sequence or serial number identifying the position in the queue, combined with an individual queue identifier)

Overlapped slow RPCs or RDBMS Queries

- Inexperienced developers often neglect that most DBC SQL queries are blocking RPCs at some level; few DBC/language bindings are asynchronous “down to the wire” (r2dbc is a notable exception)

- A typical shortcut to improving throughput is naive adoption of a connection/thread pool to permit concurrent overlapped execution

- Unless careful attention is paid to isolation/sharding and connection/thread affinity, later messages can overtake earlier ones, undermining ordering; this approach risks inadvertently becoming meaninglessly concurrent processing of intrinsically serialized workloads

- Overtaking may only be reliably observed under high-intensity workloads such as stress testing – which places this pattern in the additional category of non-deterministic behavior under load

- In general, any RPC or remote DB query requiring strict ordering within a particular scope such as a user, login session, market, etc. should be carefully sharded, or better, enqueued with asynchronous continuations.

Non-idempotent RPCs and/or enqueues

- A common approach to guaranteeing delivery following failover is to resend/replay messages at startup – and that in turn is a lot safer and simpler with idempotence; APIs lacking idempotence can severely complicate reliable recovery following failover or restart.

Inefficient read-modify-write idempotence

- Idempotent code often begins a transaction, reads a value, then conditionally writes (based on whether or not the transaction was already executed); this kind of code typically requires a sequence of two or more blocking RPCs to a DB

- Many such cases are better implemented with a single operation that is an insert/update on a table with a uniqueness constraint that cannot be violated, such that the operation will reliably fail if repeated

3. Impaired Scalability

Multiple sequential RPCs that should be batched together, paged and/or queued

- Looping through multiple sequential RPCs is often observed in MVP code, particularly code that builds tables, exports CSVs, etc.; this kind of repeated synchronous blocking is a major obstacle both to production scaling and performantly running test suites

- If the API design is completely out of the control and influence of the team developing the client code, then the best option may be to delegate these calls to API-specific threads on the other end of a bidirectional pair of internal queues

- In almost all other circumstances, the destination API should be changed to permit remote execution of an entire batch of calls with a single invocation, with support for remote paging often highly desirable.

4. Impaired Agility

Multiple services always deployed together as a tightly-coupled bundle – a monolith!

- When initially architecting platforms, it’s often forecast that individual services will be shared and reused by other future platforms that don’t exist yet

- However, such platforms become bundles of services that are monolithically version-controlled and deployed, without any serious attempt to enable independent release and deployment of individual services

- This “all or nothing” phenomenon also results in platform upgrades being held hostage by a single outstanding defect in any individual service

- One major reason to deploy as a bundle is to mitigate the risk that server-side API upgrades will break clients using older versions – but this shortcut defeats the purpose of microservice decomposition in the first place!

- … meanwhile internal API boundaries typically shift dramatically during early architectural evolution, obsoleting earlier work

- Independent version control and deployment requires backwards-compatible internal APIs and message formats for interoperability with older versions

- As with much of the other guidance here, a “barbell” approach of choosing one of two extremes is advocated – either adopt fully independent microservices ab initio, or take the genuine shortcut of a single monolithic service with clean internal API boundaries that can be a foundation for future decomposition

Generally, fully independent microservices can be deferred until individual services will actually be used by multiple applications, requiring them to be version-controlled and deployed independently with internal APIs as contracts

5. Impaired Reliability

Services that purport to be independent but share a remote DB

- In an MVP situation, developers will often take the shortcut of stashing data in a shared DB and retrieving it elsewhere in other services

- The DB becomes a covert/unintended inter-service communication and synchronization mechanism, and this can completely undermine microservices decomposition and architectural integrity, as well as contributing to mysterious data inconsistencies

- With the exception of reference and static data, shared DB access is rarely a good idea; even in the “static lookup data” case, such data is rarely truly immutable, and the circumstances in which it changes need to be carefully considered

Failure to shut down gracefully and reliably, including when (soft) killed

- Services engaged in a lot of RPC and queuing activity often hang on shutdown, waiting interminably for an event that will never occur because a part of the service has already exited, a deadlock that only occurs in shutdown is occurring, or for some other shutdown-specific reason

- Developers often take the view that ensuring prompt and orderly shutdown of a live service is complex, time-consuming and optional. This indiscipline causes numerous problems:

- Inability to reliably troubleshoot resource leaks, including memory leaks (tools like valgrind require a complete and orderly shutdown)

- Inability to reliably and rapidly orchestrate failover and restarts in testing

- Delayed failover and prolonged outages as fencing in clustering falls back slowly to a hard kill

- A key technique when shutting down queues in an orderly fashion is the use of sentinel messages to reliably drain and complete outbound queues – a sender stops enqueuing after the sentinel, waits for the receiver to acknowledge it, then continues to shut down knowing that nothing pending is left in the queue; some MQ frameworks have this capability built-in

- Implementing cluster health checks (at Paxos we mostly use Kubernetes) can help to ensure adherence to graceful shutdowns

Failing to test alerting by simulating alert conditions

- Alerting on errors, inconsistencies or breaks without synthesizing those error conditions in automated testing to ensure that the alerting works

- This gap in test coverage can dramatically increase the severity of production incidents, where the cause of an outage could have been alerted and responded to, but the alerting itself had never been simulated or tested, and failed when needed!

This article is not at all exhaustive, but it highlights our recent experience at Paxos. Hopefully, some of these patterns will resonate with other engineering teams experiencing high acceleration while transitioning software platforms from start-up to scale-up!

**A huge shout out to Zander Khan and Ilan Gitter for their contributions to this post.**

Join us in building a new, open financial system!

Paxos Engineering is in its first year of hyper-growth. That means there’s plenty of room for you to make an impact as we scale!

From technically fascinating work in crypto and blockchain to a highly collaborative, global culture that’s intentional about remote-first, sky’s the limit for you to grow your engineering career at Paxos.

Head to our Careers site to view our 200+ current openings today.